Devices using my home wireless network have suffered from a mysterious quality issue for a few months. It manifests in the form of episodes, which vary a lot in their characteristics, but tend to have high latency (as high as 40 seconds) and sometimes high packet loss (greater than 80%). As Steve Litt tells us, intermittent problems challenge us the most, as unknown factors modulate them. Though the issue persists, I’ve learned a lot in troubleshooting it.

Basic home networks easily vex the newcomer with their complexity. Even products geared toward enthusiasts, such as Linux-based routers, often hide their internal workings behind layers of vendor-branded tools and user interfaces that mean to simplify maintenance. Documentation varies wildly, sometimes assuming familiarity with the esoteric details of kernels and networking theory, yet sometimes oversimplifying. OpenWRT, a Linux distribution popular for routers, could use some help with its documentation.

This makes it difficult to state with confidence what one has actually learned, as no single narrative explains how everything works. Thus, my knowledge must be treated as provisional. If one can learn it by experience, it’ll have to suffice for troubleshooting.

Troubleshooting begins at the client. We should not assume the router or ISP faulty when we have not first sought evidence of a client-side problem. My Dell 9360, for example, has a history of ath10k driver problems. We’d be wise to rule them out.

- `ip addr` can tell you what interfaces are up. `ifconfig` also does this, but isn’t available on all systems.
- `dmesg | grep ath10k` can be useful, but not all error messages actually indicate a problem. For instance, mine constantly spews out `Unknown eventid: ...`, but works fine.
- `dmesg | grep ...` for your interface can reveal problems here, which suggest a wireless link problem (low SNR), or router issue.

If you can convince yourself that the client works, you move on to observing the link and the router.
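
The interface check can also be scripted. What follows is a minimal sketch, assuming a reasonably recent iproute2 whose `ip -j addr` prints JSON with `ifname` and `operstate` fields. The function name and the sample data (including the interface names) are made up for illustration.

```python
import json


def interfaces_up(ip_json: str) -> list[str]:
    """Return the names of interfaces whose operstate is UP."""
    return [
        link["ifname"]
        for link in json.loads(ip_json)
        if link.get("operstate") == "UP"
    ]


# Hypothetical, heavily abbreviated stand-in for `ip -j addr` output.
sample = (
    '[{"ifname": "lo", "operstate": "UNKNOWN"},'
    ' {"ifname": "wlp58s0", "operstate": "UP"},'
    ' {"ifname": "enx0", "operstate": "DOWN"}]'
)

print(interfaces_up(sample))
```

The loopback interface usually reports `UNKNOWN` rather than `UP`, so it drops out of the result without special handling.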

Ping the router. Observe rtt (round-trip time; latency) and packet loss.

Ping something on the internet, like google.com or a DNS server such as 1.1.1.1 (Cloudflare) or 8.8.8.8 (Google).
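
To compare episodes, it helps to reduce each ping run to a couple of numbers. Here is a rough sketch that pulls packet loss and rtt out of the summary printed by the iputils `ping` found on most Linux desktops. The summary format is an assumption (BusyBox `ping` on a router words it differently), and the sample text and its values are invented for illustration.

```python
import re
from typing import NamedTuple, Optional


class PingSummary(NamedTuple):
    loss_percent: float
    rtt_avg_ms: Optional[float]  # None when no replies arrived
    rtt_max_ms: Optional[float]


def parse_ping(output: str) -> PingSummary:
    """Extract packet loss and rtt figures from an iputils ping summary."""
    loss = re.search(r"([\d.]+)% packet loss", output)
    if loss is None:
        raise ValueError("no packet-loss summary found")
    rtt = re.search(
        r"rtt min/avg/max/mdev = ([\d.]+)/([\d.]+)/([\d.]+)/([\d.]+) ms",
        output,
    )
    avg = float(rtt.group(2)) if rtt else None
    worst = float(rtt.group(3)) if rtt else None
    return PingSummary(float(loss.group(1)), avg, worst)


# Invented sample in the iputils summary format.
sample = """\
5 packets transmitted, 4 received, 20% packet loss, time 4006ms
rtt min/avg/max/mdev = 1.234/5.678/9.012/2.345 ms
"""

print(parse_ping(sample))
```

Running it once against the router and once against an internet host, during and outside an episode, gives four summaries that can be laid side by side.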

In my case, each of these pings exhibits similarly poor characteristics. By a beginner’s logic, this might suggest a problem in the router, the only common node in each path. However, the complexity of networks puts this thinking into doubt.

One can concoct several theories of network latency. Modern operating systems such as Linux run lots of processes simultaneously. With too high a CPU load, the router might fail to route network traffic in a timely fashion, being too preoccupied running other processes. Depending on the policy of the system under such circumstances, it might even drop packets. Thus, the CPU load theory can explain both latency and packet loss.

Testing this theory requires a network connection good enough to log into the router via SSH and run uptime, which reports CPU load averages over different time windows. If the load averages exceed the number of CPU cores in the machine, then circumstances have overloaded the processor. However, this observation alone does not explain poor network quality. We’d need to show that the CPU time was being spent on things unrelated to routing packets. I don’t know how to demonstrate this. If you do, let me know.
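
The comparison of load averages against core count is simple enough to automate on the client side, using text captured from `uptime` over SSH. This is only a sketch: it assumes the usual `load average: a, b, c` suffix with a dot as decimal separator, the sample line is invented, and the core count has to be supplied by hand.

```python
import re


def parse_load_averages(uptime_output: str) -> tuple[float, float, float]:
    """Return the 1, 5 and 15 minute load averages from `uptime` output."""
    match = re.search(
        r"load average: ([\d.]+), ([\d.]+), ([\d.]+)", uptime_output
    )
    if match is None:
        raise ValueError("no load averages found")
    one, five, fifteen = (float(g) for g in match.groups())
    return one, five, fifteen


def overloaded(loads: tuple[float, ...], cores: int) -> list[bool]:
    """Flag each time window whose load average exceeds the core count."""
    return [load > cores for load in loads]


# Invented sample line; a single-core router is assumed below.
sample = " 21:14:03 up 3 days,  2:10,  load average: 1.85, 0.92, 0.40"

loads = parse_load_averages(sample)
print(loads, overloaded(loads, cores=1))
```

As noted above, a flagged window only says the processor was busy, not what it was busy with.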

The CPU load theory assumes that the router delivers packets after a delay. This implies a place where packets rest temporarily, waiting to be routed. It turns out that such places exist, and we call them queues or buffers.

The Linux kernel has a sophisticated traffic control infrastructure. Many queuing disciplines have been devised, which aim to achieve certain traffic characteristics. A relatively new one, fq_codel, has recently become the default on a large number of Linux systems. It aims to cure a problem, dubbed “bufferbloat”, which carries a distinct theory of network latency.

The bufferbloat theory tells a story of long buffers inside the kernel filling up with packets when a network client tries to initiate a large flow of traffic, such as downloading a torrent or watching a YouTube video. It also argues that TCP exacerbates the problem with its retry logic.
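
Some back-of-the-envelope arithmetic shows why a long buffer matters: a packet arriving at the back of a full queue must wait for everything ahead of it to drain at the link rate. The sketch below computes that worst-case wait. The queue length, packet size, and link rate are illustrative assumptions, not measurements from my network.

```python
def queue_delay_seconds(
    queued_packets: int, packet_bytes: int, link_bits_per_second: float
) -> float:
    """Time for a full queue to drain at the given link rate."""
    queued_bits = queued_packets * packet_bytes * 8
    return queued_bits / link_bits_per_second


# Assumed figures: 256 full-size packets waiting on a 1 Mbit/s uplink.
delay = queue_delay_seconds(
    queued_packets=256, packet_bytes=1500, link_bits_per_second=1_000_000
)
print(f"{delay:.3f} s")
```

With these assumed numbers a newly arrived packet waits about three seconds, which is why a slow uplink combined with a deep buffer can turn one large upload into seconds of added latency for everything else.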

I’ve found it difficult to use bufferbloat’s narrative in my situation, as all of the implicated hosts already use fq_codel as their queuing discipline. Surely, if fq_codel solves bufferbloat, then something else must be our problem. Nevertheless, we should attempt to observe bufferbloat rather than imagine ourselves experiencing it.

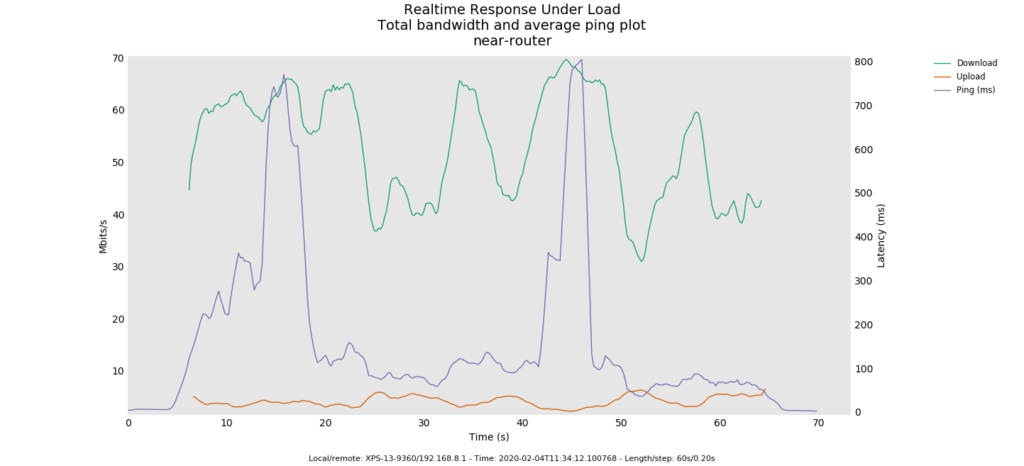

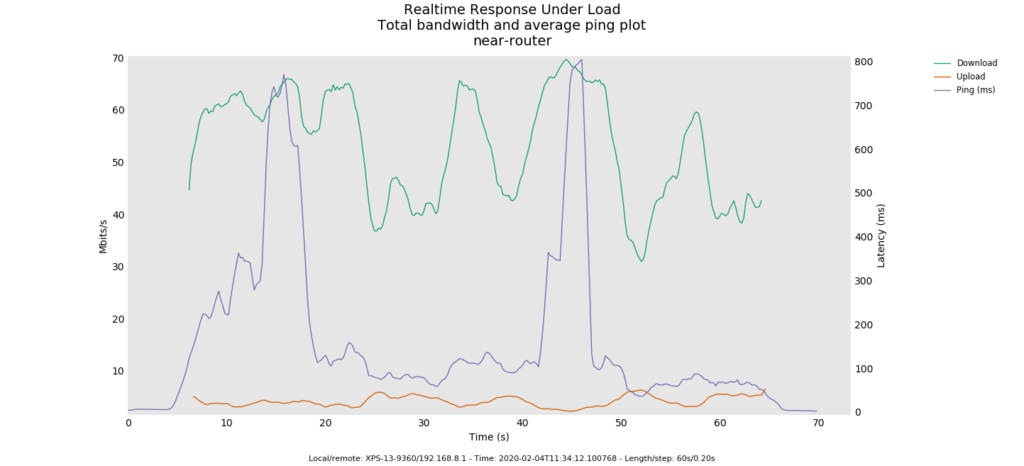

The Flent tool automates this process, with one caveat: it must run against a netperf server. With my luck, the public server at netperf.bufferbloat.net had crashed, so I’d have to set up my own.

I can easily control my own router. However, my distribution of OpenWRT (provided by GL-iNet) lacks a netperf package, meaning I’d have to cross-compile it myself. No problem: I used Buildroot to construct a statically linked MIPS big-endian binary, only half a megabyte in size, sure to deploy easily.

Flent provides a large battery of tests to run. Had I not encountered Dave Taht, one of the bufferbloat researchers, imploring lots of people on the internet to run RRUL, I would have gotten stuck again.

We don’t see an inverse correlation between latency and throughput, so bufferbloat may not be a problem. Nevertheless, the plot shows high latency and jitter.
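
Rather than judging correlation by eye, one could compute it. The following is a sketch of the Pearson correlation coefficient for two equal-length series, such as latency and throughput samples taken at the same instants. How those samples get extracted from a test run is left open, and the numbers in the usage lines are toy values, not data from my plot.

```python
from math import sqrt
from typing import Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length series."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two series of equal length, at least 2 samples")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return covariance / sqrt(var_x * var_y)


# Toy values only: a perfectly inverse relationship gives about -1.
print(pearson([1.0, 2.0, 3.0], [6.0, 4.0, 2.0]))
```

A coefficient near zero would back up the reading that latency and throughput move independently here, while a value near -1 or +1 would suggest a relationship worth a closer look.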

My current hypothesis is related to heat dissipation. The router in question has no heatsink. When it gets too hot, the SoC will start throttling itself to generate less heat, which will surely affect network performance. I haven’t had time to test this hypothesis, however.


© 2025 Karl Schultheisz